Unlocking the Power of Language Models: A Deep Dive into Innovative Prompting Strategies

Exploring new methods to improve language model responses through creative prompts and principled instructions.

Revolutionizing Language Model Prompts

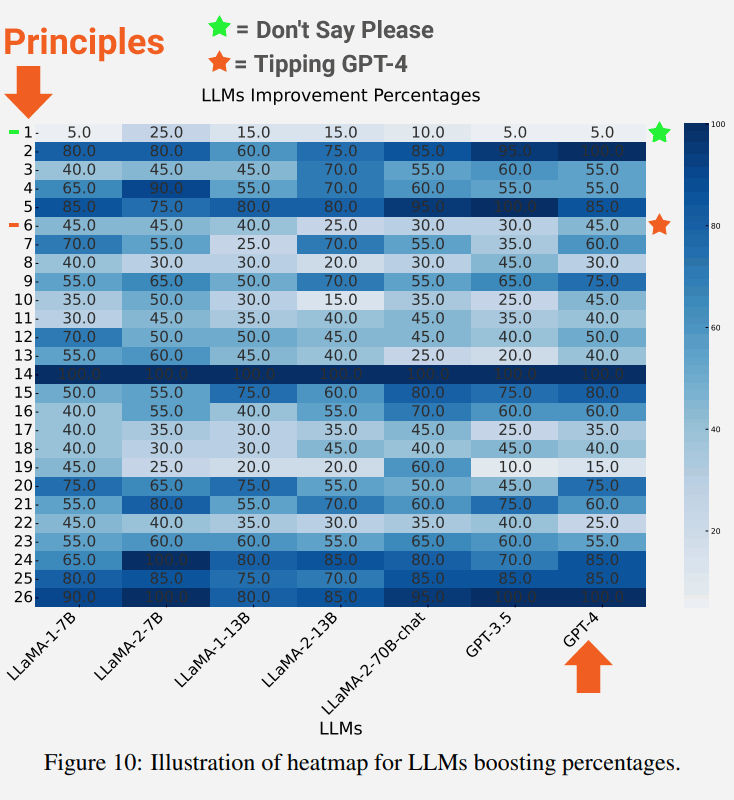

In a recent study, researchers examined how the wording of a prompt affects language model output, testing 26 principled prompting strategies. Tactics such as offering the model a tip and structuring prompts for clarity produced responses that aligned noticeably better with user intentions.
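To make "structuring prompts for clarity" concrete, here is a minimal Python sketch that assembles a prompt from labeled, delimited sections. The `structure_prompt` function, the section names, and the `###...###` delimiter style are illustrative assumptions, not code from the study.

```python
def structure_prompt(instruction: str, context: str = "", output_format: str = "") -> str:
    """Assemble a prompt from labeled sections so each part is clearly delimited.

    Hypothetical helper: section names and delimiters are illustrative choices.
    """
    sections = [
        ("Instruction", instruction),
        ("Context", context),
        ("Output format", output_format),
    ]
    parts = []
    for title, body in sections:
        if body:  # skip sections the caller left empty
            parts.append(f"###{title}###\n{body.strip()}")
    # Blank lines between sections keep the boundaries easy to see
    return "\n\n".join(parts)


print(structure_prompt(
    "Summarize the article in three bullet points.",
    context="The reader is a busy product manager.",
))
```

Empty sections are skipped, so the same helper works for a bare instruction or a fully specified request.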

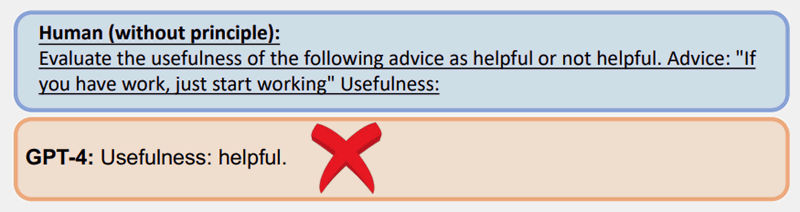

A prompt requiring reasoning and logic failed when no principled prompt was used.

The research paper 'Principled Instructions Are All You Need for Questioning LLaMA-1/2, GPT-3.5/4', written by researchers at the Mohamed bin Zayed University of AI, presents a detailed analysis of these techniques. The researchers evaluated each prompting technique on several large language models (LLMs) and reported notable gains in response accuracy.

A prompt that included worked examples of how to solve the reasoning and logic problem produced a correct answer.
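The successful prompt relied on worked examples, a technique usually called few-shot prompting. A minimal sketch of how such a prompt can be assembled follows; the `few_shot_prompt` helper and the sample syllogisms are hypothetical and do not reproduce the prompt shown in the screenshot.

```python
from typing import Sequence, Tuple


def few_shot_prompt(examples: Sequence[Tuple[str, str]], question: str) -> str:
    """Place worked (question, solution) pairs before the real question.

    Hypothetical helper: the Q:/A: layout is one common convention, not a requirement.
    """
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]
    # Leave the final answer slot empty so the model continues the pattern
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Made-up example pair, used only to show the format
examples = [
    (
        "If all bloops are razzies and all razzies are lazzies, are all bloops lazzies?",
        "Yes. Every bloop is a razzie, and every razzie is a lazzie, so every bloop is a lazzie.",
    ),
]
print(few_shot_prompt(examples, "If no cats are dogs and all puppies are dogs, can a puppy be a cat?"))
```

The worked answer shows the model the reasoning pattern expected, rather than only the final verdict.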

A post on X (formerly Twitter)

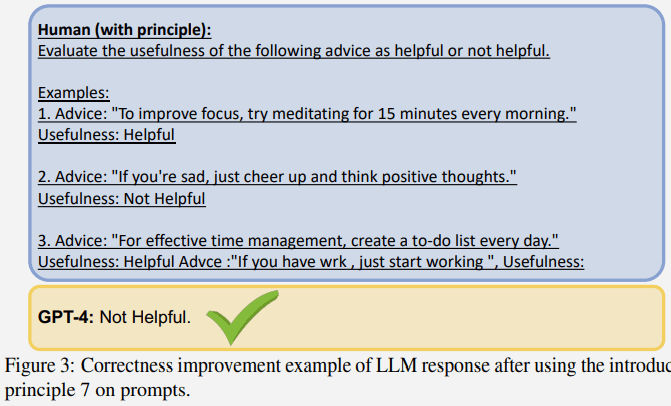

The Impact of Politeness and Tipping on Language Model Responses

Have you ever considered the influence of politeness in your interactions with language models? Surprisingly, anecdotal evidence suggests that using phrases like 'please' and 'thank you' can affect the output of models like ChatGPT. A recent informal test revealed that offering a tip along with a prompt resulted in longer and more detailed responses.
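One way to run this kind of informal test yourself is to generate matching prompt variants and send each one to the same model. The sketch below assumes a hypothetical list of courtesy phrases and a tip sentence of our own wording; `strip_politeness` and `make_variants` are illustrative helpers, and the call to an actual model is left out.

```python
import re
from typing import Dict

# Hypothetical list of courtesy phrases; adjust to the phrasing you want to test.
POLITE_PHRASES = ["please", "thank you", "thanks", "if you don't mind"]


def strip_politeness(prompt: str) -> str:
    """Remove courtesy phrases (case-insensitive) and tidy the leftover spacing."""
    pattern = r"\b(?:" + "|".join(re.escape(p) for p in POLITE_PHRASES) + r")\b[,!.]?"
    text = re.sub(pattern, "", prompt, flags=re.IGNORECASE)
    text = re.sub(r"\s{2,}", " ", text).strip()  # collapse gaps left by removals
    return text[:1].upper() + text[1:]  # restore a capital first letter


def make_variants(prompt: str, tip_amount: int = 200) -> Dict[str, str]:
    """Build original, direct, and direct-plus-tip versions of one prompt."""
    direct = strip_politeness(prompt)
    return {
        "original": prompt,
        "direct": direct,
        # Tip wording is an assumption made for this illustration
        "direct_with_tip": f"{direct} I'm going to tip ${tip_amount} for a better solution!",
    }


for name, text in make_variants("Please explain recursion. Thank you!").items():
    print(f"{name}: {text}")
```

Keeping everything except the variable under test identical makes any difference in the responses easier to attribute.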

Improvements of LLMs with creative prompting

The study tested politeness by comparing prompts with and without polite phrases. Contrary to common belief, direct prompts without polite phrasing improved ChatGPT responses by 5%. Including a tip in the prompt increased output length by 45%, showing how strongly a user's choice of wording can shape a model's output.
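Percentages like these are relative changes: the difference between the new value and the baseline, divided by the baseline. A short Python sketch of that arithmetic follows; the word counts are made-up numbers chosen only to show how a 45% increase is computed, not data from the study.

```python
def relative_change(baseline: float, treated: float) -> float:
    """Percentage change of `treated` relative to `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    # Multiply before dividing to keep round inputs exact
    return (treated - baseline) * 100 / baseline


# Hypothetical word counts: a 400-word answer without a tip vs. 580 words with one
print(relative_change(400, 580))  # 45.0
```

A negative result means the treated prompt produced less than the baseline.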

Unveiling the Future of Language Model Optimization

To test the 26 principles, the researchers ran them on several LLMs, including LLaMA-1/2 and GPT-3.5/4. The principles are grouped into categories such as Prompt Structure, User Engagement, and Content Style, each targeting a different aspect of how a prompt steers the model toward a high-quality response.
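One way to picture category-based principles is as small prompt transformations that can be composed. In the minimal sketch below, the `PRINCIPLES` registry, the category assignments, and the appended sentences are our own illustrative assumptions rather than the paper's actual list of 26 principles.

```python
from typing import Callable, Dict, List

Transform = Callable[[str], str]

# Illustrative principles grouped by category (hypothetical wording and grouping).
PRINCIPLES: Dict[str, List[Transform]] = {
    "Prompt Structure": [
        lambda p: f"{p}\nLet's think step by step.",
    ],
    "User Engagement": [
        lambda p: f"{p}\nAsk me clarifying questions if anything is ambiguous.",
    ],
    "Content Style": [
        lambda p: f"{p}\nAnswer concisely and without bias.",
    ],
}


def apply_principles(prompt: str, categories: List[str]) -> str:
    """Apply every transform from the chosen categories, in the given order."""
    for category in categories:
        for transform in PRINCIPLES[category]:
            prompt = transform(prompt)
    return prompt


print(apply_principles(
    "Explain how a hash map handles collisions.",
    ["Prompt Structure", "Content Style"],
))
```

Keeping each principle as a separate function makes it easy to switch categories on or off when comparing prompt variants.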

The study emphasizes best practices in prompt design: clarity, relevant context, and alignment with the task. Applying these principles improved response accuracy across the language models tested, and the results suggest that principled prompts can make responses more relevant, concise, and objective.