Tree of Thoughts: A New Approach to Prompting by Google DeepMind Researchers

Google DeepMind researchers have published a new prompting approach called Tree of Thoughts, which outperformed input-output, chain-of-thought, and self-consistency prompting in their experiments. This article describes how Tree of Thoughts works, compares it with those prompting strategies, explores its inspiration from dual process models of human cognition, and summarizes the results and conclusions of the research.

Introduction

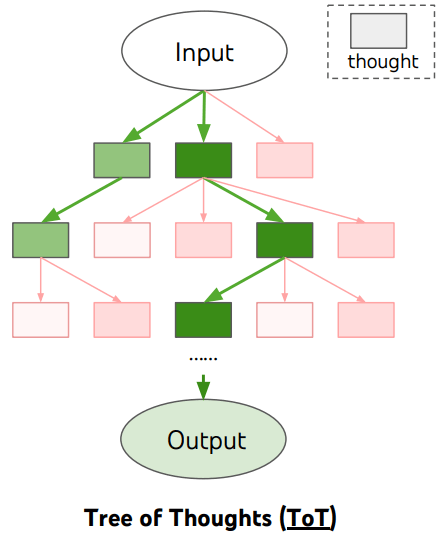

In generative AI, prompting methods play a crucial role in getting better and more sophisticated responses from language models. Researchers from Google DeepMind and Princeton University recently introduced a prompting strategy called Tree of Thoughts (ToT), designed to enable more sophisticated reasoning and produce better outputs than existing strategies such as Chain of Thought (CoT) and Self-consistency with CoT.

Tree Of Thoughts Prompting For Better Generative AI Results

The ToT prompting framework uses a tree structure to guide a language model's reasoning, letting it deliberately explore and evaluate alternatives while solving a problem. The idea is inspired by dual process models of human cognition, which propose two distinct modes of decision-making: intuitive and deliberative.


Comparing Prompting Strategies

The Tree of Thoughts paper compares ToT against three other methods: Input-output (IO) Prompting, Chain of Thought (CoT) Prompting, and Self-consistency with CoT. Each one guides a language model toward an answer in a different way.

IO Prompting gives the language model a problem and asks for the answer directly, with no intermediate reasoning steps. It is the simplest approach: the model maps the input straight to an output.

Chain of Thought (CoT) Prompting, by contrast, asks the language model to write out a sequence of intermediate reasoning steps before giving its final answer, so that each step builds logically on the previous one.
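To make the contrast concrete, here is a minimal Python sketch of how the same question might be phrased as an IO prompt and as a few-shot CoT prompt. The template wording, the worked example, and the function names `io_prompt` and `cot_prompt` are illustrative assumptions, not the prompts used in the research.

```python
# Illustrative prompt templates (hypothetical; not the paper's exact prompts).

# One worked example showing the intermediate steps a CoT prompt demonstrates.
COT_EXAMPLE = (
    "Q: Use 1 2 3 4 and + - * / to obtain 24.\n"
    "A: 1 * 2 = 2 (left: 2 3 4)\n"
    "2 * 3 = 6 (left: 4 6)\n"
    "4 * 6 = 24 (left: 24)\n"
    "Answer: (1 * 2) * 3 * 4 = 24\n"
)


def io_prompt(question: str) -> str:
    """IO prompting: ask for the answer directly, with no reasoning steps."""
    return f"Q: {question}\nA:"


def cot_prompt(question: str) -> str:
    """CoT prompting: show a worked example with steps, then pose the new question."""
    return f"{COT_EXAMPLE}\nQ: {question}\nA:"


if __name__ == "__main__":
    question = "Use 4 9 10 13 and + - * / to obtain 24."
    print(io_prompt(question))
    print("---")
    print(cot_prompt(question))
```

The only difference between the two prompts is the demonstration: the CoT version shows the model a step-by-step format to imitate before it answers.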

Self-consistency with CoT takes a different approach: it samples several chain-of-thought responses to the same prompt and selects the final answer that appears most often. It relies on the intuition that a complex reasoning problem usually admits several different reasoning paths that lead to the same correct answer.
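The voting step of self-consistency is easy to express in code. The sketch below assumes a hypothetical `sample` callable that returns one chain-of-thought completion as a `(reasoning, final_answer)` pair. A real system would call a language model at a non-zero sampling temperature; here that call is replaced by a hypothetical `make_fake_sampler` that cycles through canned outputs for the question "What is 17 * 3 + 4?".

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Tuple

# One sampled chain-of-thought completion: (reasoning text, final answer).
Sampler = Callable[[], Tuple[str, str]]


def self_consistency(sample: Sampler, n_samples: int = 5) -> str:
    """Draw several reasoning paths and return the most common final answer."""
    finals = [sample()[1] for _ in range(n_samples)]
    # Only the final answers are voted on; the reasoning text is discarded.
    answer, _votes = Counter(finals).most_common(1)[0]
    return answer


def make_fake_sampler() -> Sampler:
    """Hypothetical stand-in for a language model sampled at non-zero temperature."""
    outputs = cycle([
        ("17 * 3 = 51, then 51 + 4 = 55", "55"),
        ("17 * 3 = 41, then 41 + 4 = 45", "45"),
        ("3 * 17 is 51; adding 4 gives 55", "55"),
        ("17 + 4 = 21, then 21 * 3 = 63", "63"),
        ("10 * 3 + 7 * 3 = 51, and 51 + 4 = 55", "55"),
    ])
    return lambda: next(outputs)


print(self_consistency(make_fake_sampler()))  # 55
```

Three different reasoning paths converge on 55, so the occasional faulty path (45, 63) is outvoted.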

Inspiration from Dual Process Models

The development of Tree of Thoughts is inspired by dual process models in human cognition, which propose two distinct decision-making processes—fast, automatic, unconscious thinking and slow, deliberate, conscious reasoning.

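The ToT prompting framework uses a tree structure to simulate this deliberative, evaluative thinking. Each node in the tree is a partial solution, or "thought". The language model proposes several candidate next thoughts and evaluates how promising each one is, and a search algorithm such as breadth-first or depth-first search decides which branches to pursue, looking ahead or backtracking when needed. This lets the model explore multiple reasoning paths and make informed decisions at each step.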
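The search can be sketched for the Game of 24, the puzzle the researchers used for testing. In this minimal Python sketch, each thought combines two of the remaining numbers, and a breadth-first search keeps the `beam_width` most promising partial solutions at each level. In the actual framework both the thought generator and the evaluator are language model prompts; here `propose` enumerates arithmetic moves directly and `evaluate` is a brute-force oracle standing in for the model's judgment, so these names, the scoring, and the default beam width are assumptions for illustration.

```python
from fractions import Fraction
from itertools import combinations
from typing import List, Optional, Sequence, Tuple

# A state is a partial solution: (numbers still available, steps taken so far).
State = Tuple[Tuple[Fraction, ...], Tuple[str, ...]]


def propose(state: State) -> List[State]:
    """Thought generator (stand-in for an LM prompt): combine any two numbers."""
    nums, steps = state
    children: List[State] = []
    for i, j in combinations(range(len(nums)), 2):
        a, b = nums[i], nums[j]
        rest = tuple(n for k, n in enumerate(nums) if k not in (i, j))
        moves = [(a + b, f"{a} + {b}"), (a * b, f"{a} * {b}"),
                 (a - b, f"{a} - {b}"), (b - a, f"{b} - {a}")]
        if b != 0:
            moves.append((a / b, f"{a} / {b}"))
        if a != 0:
            moves.append((b / a, f"{b} / {a}"))
        for value, expr in moves:
            children.append((rest + (value,), steps + (f"{expr} = {value}",)))
    return children


def reachable(nums: Tuple[Fraction, ...]) -> bool:
    """Brute-force check that 24 can still be made from the remaining numbers."""
    if len(nums) == 1:
        return nums[0] == 24
    return any(reachable(child_nums) for child_nums, _ in propose((nums, ())))


def evaluate(state: State) -> float:
    """State evaluator (oracle stand-in for an LM's sure/likely/impossible judgment)."""
    return 1.0 if reachable(state[0]) else 0.0


def tree_of_thoughts_bfs(numbers: Sequence[int], beam_width: int = 5) -> Optional[List[str]]:
    """Breadth-first search that keeps the best `beam_width` states per level."""
    frontier: List[State] = [(tuple(Fraction(n) for n in numbers), ())]
    for _ in range(len(numbers) - 1):  # each step merges two numbers into one
        candidates = [child for state in frontier for child in propose(state)]
        candidates.sort(key=evaluate, reverse=True)  # most promising first
        frontier = candidates[:beam_width]
    for nums, steps in frontier:
        if nums[0] == 24:
            return list(steps)
    return None


print(tree_of_thoughts_bfs([4, 9, 10, 13]))
```

With a perfect evaluator the beam never drops a solvable branch. A real language model gives noisy value estimates, which is why keeping several candidates per level, rather than committing to a single chain as CoT does, is the point of the tree.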

The researchers draw parallels between the intuitive, fast, and automatic decision-making process (System 1) and the deliberative, conscious thinking process (System 2), highlighting the intersection of cognitive models with contemporary language models.

Results and Conclusions

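The researchers tested the ToT prompting strategy on three tasks: Game of 24 (a mathematical card game in which four numbers must be combined with +, -, *, and / to reach 24), Creative Writing, and Mini Crosswords, comparing its performance against the three other prompting methods. ToT produced better outcomes and more sophisticated reasoning across the tasks. In the Game of 24, for example, GPT-4 with chain-of-thought prompting solved 4% of the problems, while ToT solved 74%.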
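In the Game of 24, an answer counts only if it uses each of the four given numbers exactly once and evaluates to exactly 24. The following Python sketch checks a candidate answer under those rules. The function name `is_valid_24` is hypothetical, and exact `Fraction` arithmetic is our choice so that answers involving division, such as `8 / (3 - 8 / 3)`, are not rejected by floating-point rounding.

```python
import ast
import operator
from collections import Counter
from fractions import Fraction
from typing import Iterable, List

# Only the four basic arithmetic operators are allowed.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _evaluate(node: ast.AST, used: List[int]) -> Fraction:
    """Recursively evaluate a parsed expression, recording every integer it uses."""
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        left = _evaluate(node.left, used)
        right = _evaluate(node.right, used)
        return _OPS[type(node.op)](left, right)
    if isinstance(node, ast.Constant) and type(node.value) is int:
        used.append(node.value)
        return Fraction(node.value)
    raise ValueError("only integers and + - * / are allowed")


def is_valid_24(expression: str, numbers: Iterable[int]) -> bool:
    """True if the expression uses each given number exactly once and equals 24."""
    used: List[int] = []
    try:
        value = _evaluate(ast.parse(expression, mode="eval").body, used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return False
    return value == 24 and Counter(used) == Counter(numbers)


print(is_valid_24("(10 - 4) * (13 - 9)", [4, 9, 10, 13]))  # True
print(is_valid_24("8 / (3 - 8 / 3)", [3, 3, 8, 8]))  # True
print(is_valid_24("4 * 6", [4, 9, 10, 13]))  # False
```

Parsing with `ast` instead of calling `eval` keeps the checker from executing arbitrary code in a model's answer.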

However, the researchers also acknowledge that ToT may not be necessary for tasks that GPT-4 already excels at. They conclude that the deliberative problem-solving approach of Tree of Thoughts provides actionable methods for contemporary language models, bridging the gap between classical insights about problem-solving and the capabilities of modern AI.

The researchers identify the intersection of Tree of Thoughts with classical approaches to AI as an exciting direction, one that offers new possibilities for solving complex problems and enhancing the creative capabilities of language models.